Hello, fellow coding enthusiasts! In our ongoing exploration of functional programming, we've spent a good while with Java Streams. Today's session has a twist: we're clearing up a common misconception about what a stream actually is.

Setting the Stage

In our previous lectures, we've shown what stream pipelines can do through a recurring example involving a list of books. We've written pipelines that select the popular horror books and the popular romance books, in a concise functional style. Now it's time to move from theory to practice and actually execute these pipelines.

Unveiling the Pitfall

Before we proceed, one point needs clarifying: the nature of streams. Many people think of streams as another kind of collection, holding data elements that can be accessed and manipulated as often as you like. That picture is wrong. A stream does not store elements. It carries elements from a source, such as a list, through a sequence of operations, and it can be traversed only once. Think of it less as a container and more as a one-time spell that conjures a sequence of transformations.
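One way to see that a stream holds no data of its own is to change the source list after creating the stream but before running it. The sketch below is only an illustration: the class name, method name, and book titles are made up, and it assumes Java 9 or later for `List.of`. Modifying a source like this is not something to do in real code; it is here purely to show that the stream reads from the list rather than keeping a copy.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.stream.Collectors;
import java.util.stream.Stream;

class StreamIsNotAContainer {

    // Returns the titles the stream sees when its terminal operation finally runs.
    static String titlesSeenByStream() {
        List<String> titles = new ArrayList<>(List.of("Dracula", "Emma"));

        // Creating the stream copies nothing; it only refers back to the list.
        Stream<String> stream = titles.stream();

        // Added after the stream was created, but before any terminal operation.
        titles.add("Carrie");

        // The terminal operation reads the list as it is at this moment.
        return stream.collect(Collectors.joining(", "));
    }

    public static void main(String[] args) {
        System.out.println(titlesSeenByStream()); // Dracula, Emma, Carrie
    }
}
```

If the stream were a container filled at creation time, "Carrie" would be missing from the result.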

Streams Are Single-Use

Think of a stream as a short-lived object that exists just long enough to guide us through a series of operations. Once a stream has been operated upon (filtered, mapped, reduced), it is spent and cannot be used again. This is often described as immutability, but the more precise point is that a stream is single-use: invoking another operation on a stream that has already been used typically fails with an IllegalStateException. A stream also offers no way to add or remove elements, unlike a collection, and a well-behaved pipeline leaves its source untouched.
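To make the one-shot behavior concrete, here is a minimal sketch with hypothetical class and method names and made-up titles. The Stream documentation says an implementation may throw `IllegalStateException` when it detects reuse; the standard JDK implementation does, and the example assumes that.

```java
import java.util.List;
import java.util.stream.Stream;

class SingleUseStream {

    static final List<String> TITLES = List.of("Dracula", "Emma", "Carrie");

    // Tries to run a second terminal operation on the same stream
    // and reports whether the reuse was rejected.
    static boolean secondUseIsRejected() {
        Stream<String> stream = TITLES.stream();
        stream.count(); // first terminal operation: the stream is now consumed

        try {
            stream.count(); // second use of the same stream object
            return false;
        } catch (IllegalStateException e) {
            return true;
        }
    }

    // The collection, unlike the stream, can hand out a fresh stream every time.
    static long countFromFreshStream() {
        return TITLES.stream().count();
    }

    public static void main(String[] args) {
        System.out.println(secondUseIsRejected());   // true
        System.out.println(countFromFreshStream());  // 3
        System.out.println(countFromFreshStream());  // 3
    }
}
```

The list can be asked for a new stream as often as you like; it is only the individual stream object that is used up.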

A Peek into Stream Pipelines

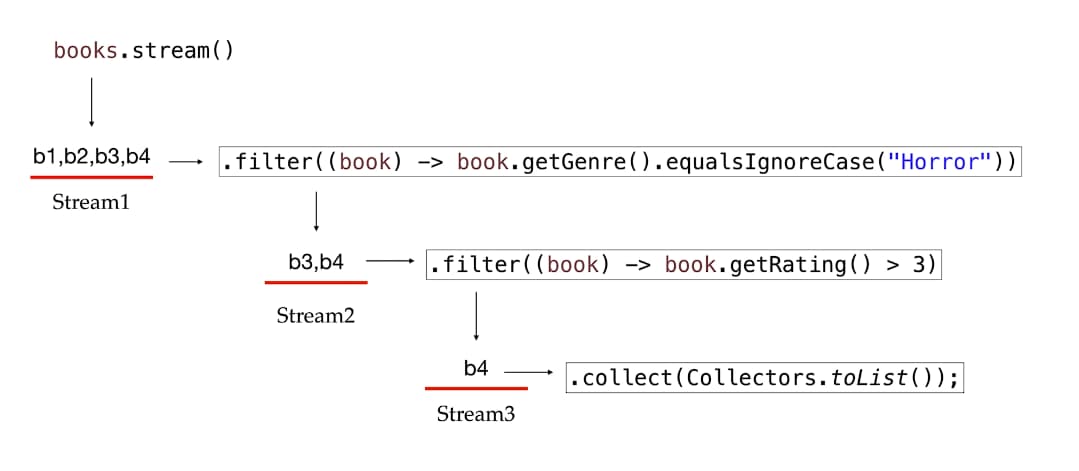

Let's revisit our pipeline example to see this behavior more vividly. Imagine a stream as a conveyor belt moving books along. At each station (operation), books are either selected or transformed. Here's the catch: the belt only moves forward, so a book that has passed a station can't revisit it. A filter station lets matching books through and drops the rest. A map station puts a new value on the belt, such as the book's title, in place of the book it received. By the time the last station is reached, only a few items might remain, and the original list of books is exactly as it was.
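The conveyor belt can be sketched in code as follows. Everything named here is an assumption made for illustration: the `Book` record, its fields, the sample data, and the rating threshold of 4.0 for "popular" are stand-ins, not the course's actual classes. Records require Java 16 or later.

```java
import java.util.List;
import java.util.stream.Collectors;

class ConveyorBeltDemo {

    // Hypothetical stand-in for the course's book type.
    record Book(String title, String genre, double rating) {}

    static final List<Book> BOOKS = List.of(
            new Book("Dracula", "HORROR", 4.5),
            new Book("Emma", "ROMANCE", 4.2),
            new Book("Carrie", "HORROR", 3.1));

    static List<String> popularHorrorTitles(List<Book> books) {
        return books.stream()
                .filter(b -> b.genre().equals("HORROR")) // station 1: drops non-horror books
                .filter(b -> b.rating() >= 4.0)          // station 2: drops unpopular books (assumed threshold)
                .map(Book::title)                        // station 3: emits a title in place of each book
                .collect(Collectors.toList());           // end of the belt: gather what is left
    }

    public static void main(String[] args) {
        System.out.println(popularHorrorTitles(BOOKS)); // [Dracula]
        System.out.println(BOOKS.size());               // 3 (the source list is unchanged)
    }
}
```

Only one title reaches the end of the belt, while the source list still holds all three books, unmodified.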

A Paradigm Shift in Thinking

When you write a stream pipeline, remember that you're describing a sequence of transformations, not manipulating a collection. Instead of reusing a stream, you build a fresh pipeline from the source each time you need one. And while method chaining might make it appear that a single stream is being transformed, each intermediate operation actually returns a new stream. Each new stream builds on the previous station and sets the stage for the next one.
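Splitting a chained pipeline into named variables makes the succession of streams visible. This is a sketch under assumptions: the class, the method names, and the titles are invented for the example. The second method shows one common way to get "reuse", which is to wrap the pipeline in a method so that every call builds a new one.

```java
import java.util.List;
import java.util.stream.Collectors;
import java.util.stream.Stream;

class ChainedStreams {

    static final List<String> TITLES = List.of("Dracula", "Emma", "Carrie");

    // The same steps as a one-line chain, with each stage in its own variable.
    static boolean eachStageIsANewStream() {
        Stream<String> source = TITLES.stream();
        Stream<String> longTitles = source.filter(t -> t.length() > 4);
        Stream<String> upperCased = longTitles.map(String::toUpperCase);

        // Three variables, three different stream objects.
        return source != longTitles && longTitles != upperCased;
    }

    // Builds a fresh pipeline on every call, so it can be run as often as needed.
    static List<String> longTitlesUpperCased() {
        return TITLES.stream()
                .filter(t -> t.length() > 4)
                .map(String::toUpperCase)
                .collect(Collectors.toList());
    }

    public static void main(String[] args) {
        System.out.println(eachStageIsANewStream()); // true
        System.out.println(longTitlesUpperCased());  // [DRACULA, CARRIE]
        System.out.println(longTitlesUpperCased());  // [DRACULA, CARRIE]
    }
}
```

Calling `longTitlesUpperCased()` twice works because each call starts again from the list, rather than trying to revive a stream that has already run.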

Wrapping Up the Revelation

As we execute our example pipelines, we’re left with a deeper understanding of the transient, transformational nature of streams. Streams are your allies when you’re dealing with a sequence of data processing steps. They gracefully handle data transformation and filtering, making your code cleaner and more concise. However, always remember that streams are more akin to magic spells than treasure chests, enabling a sequence of operations that mold your data to perfection.

With this newfound clarity on the ethereal nature of streams, we move forward in our journey of functional programming with enriched insights. Our future endeavors will undoubtedly be guided by the wisdom that streams aren’t mere data containers, but the architects of elegant data transformations.

Stay tuned for more enlightening insights as we continue to explore the fascinating landscape of functional programming. Until then, embrace the magic of streams, and may your code transform as elegantly as the streams themselves!

You can find the repo for this section of the course here.